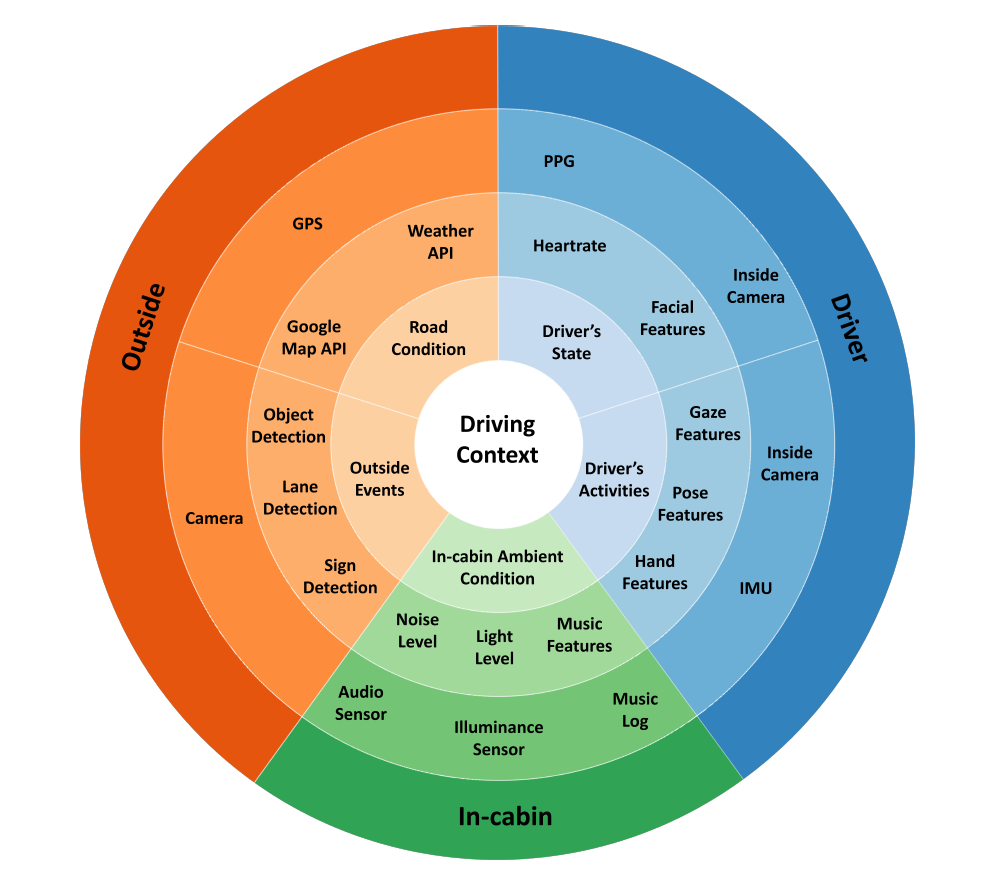

Although Autonomous Vehicles (AVs) are currently being tested in carefully mapped test areas, they cannot yet mimic the way real people feel when they drive. The challenge is to give cars the intelligence they need to understand humans and make decisions that align with human preferences. Current autonomous vehicles perform the driving task through shared autonomy, a collaboration between the human driver and the vehicle. Effective shared autonomy requires a clear understanding of the driver’s behavior, which is shaped by multiple psychophysiological and environmental variables. Naturalistic Driving Studies (NDS) have been shown to be an effective approach to understanding the driver’s state and behavior in real-world scenarios. However, because of limited technological and computing capabilities, earlier NDS focused only on vision-based approaches, ignoring important psychophysiological factors such as cognition and emotion.