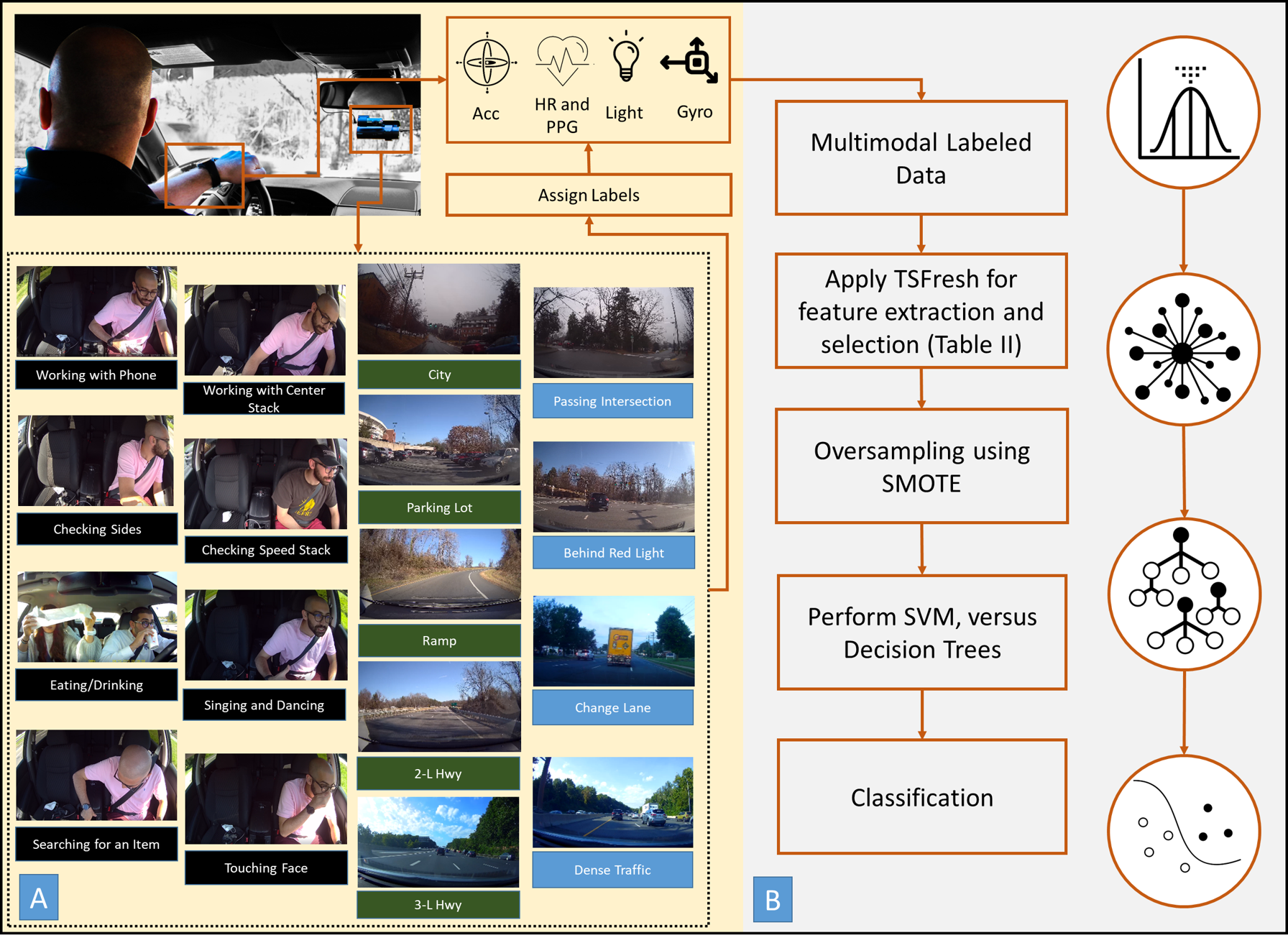

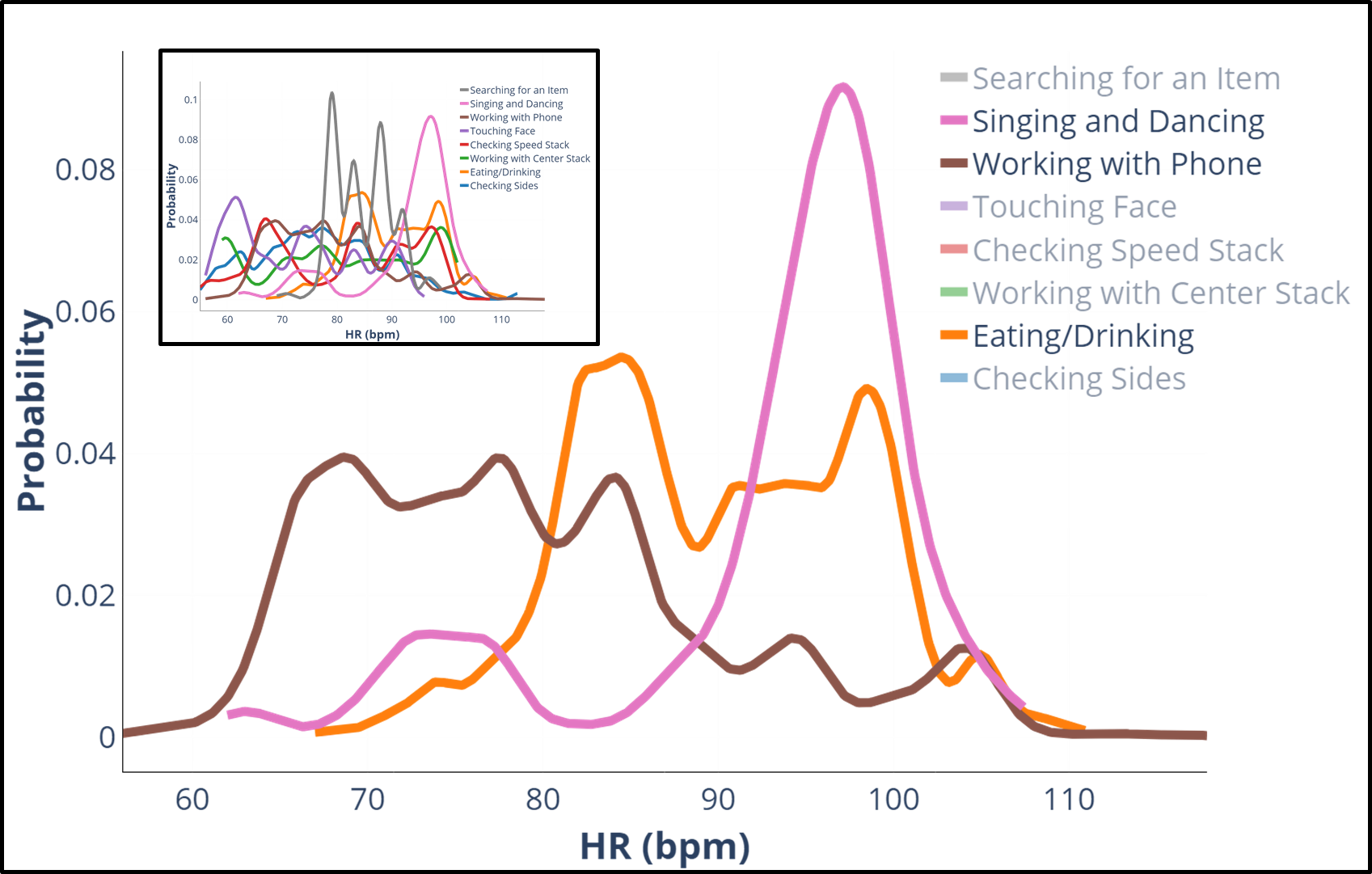

Integrating contextual cues from the driver, the cabin, and the outside environment into the vehicle’s decision-making is the centerpiece of semi-automated vehicle safety. Multiple systems have been developed to provide this context to the vehicle, and they often rely on video streams that capture drivers’ physical and environmental states. While video streams are a rich source of information, they can struggle to provide context in certain situations, such as low-illuminance environments (e.g., night driving), and they are highly privacy-intrusive. In this study, we leverage passive sensing through smartwatches to classify elements of the driving context.

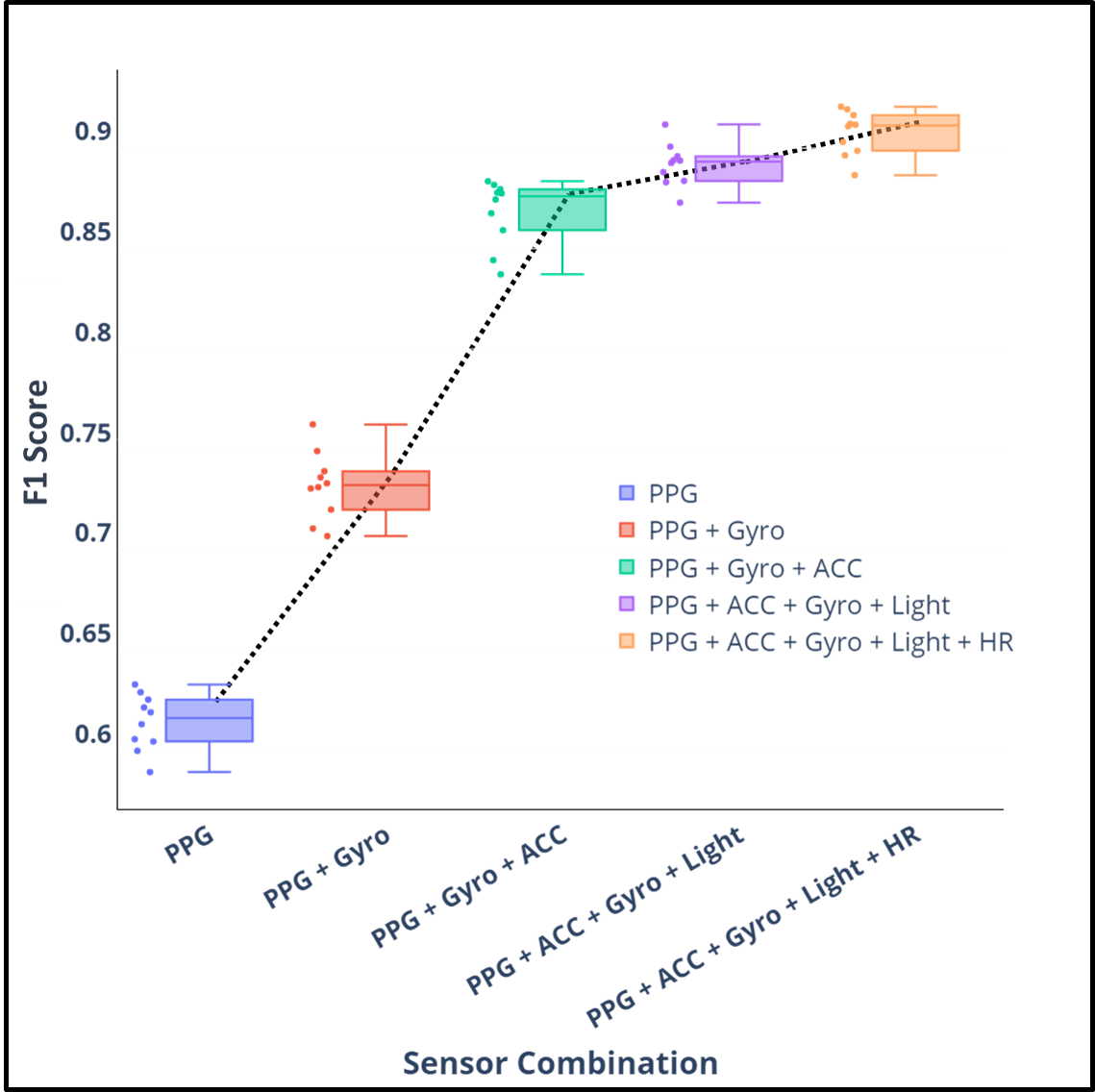

Specifically, using data collected from 15 participants in a naturalistic driving study and multiple machine learning algorithms, such as random forest, we classify drivers’ activities (e.g., using a phone and eating), outside events (e.g., passing an intersection and changing lanes), and outside road attributes (e.g., driving in a city versus on a highway) with average F1 scores of 94.55%, 98.27%, and 97.86%, respectively, under 10-fold cross-validation.
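The exact features and pipeline of the study are not described on this page. The sketch below only illustrates what an "average F1 score under 10-fold cross-validation" involves for wearable data: summarize a wrist-sensor stream into windowed features, split the windows into ten folds, train on nine folds, compute the F1 score on the held-out fold, and average across folds. Everything here is an assumption for illustration: the synthetic signals, the hypothetical labels `no_secondary_task` and `eating`, the (mean, standard deviation) features, macro-averaging of F1 across classes, and the classifier. To keep the sketch dependency-free it swaps in a simple nearest-centroid classifier instead of the random forest mentioned above. With scikit-learn, one would more typically pass a `RandomForestClassifier` to `cross_val_score(..., cv=10, scoring="f1_macro")`.

```python
import math
import random
from collections import defaultdict


def window_features(signal, window, step):
    """Summarize a 1-D sensor stream (e.g., a hypothetical wrist-acceleration
    magnitude) as (mean, standard deviation) over sliding windows."""
    feats = []
    for start in range(0, len(signal) - window + 1, step):
        chunk = signal[start:start + window]
        mean = sum(chunk) / window
        std = math.sqrt(sum((x - mean) ** 2 for x in chunk) / window)
        feats.append((mean, std))
    return feats


def k_fold_indices(n, k, seed=0):
    """Shuffle sample indices and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_centroids(X, y):
    """Nearest-centroid 'training' (stand-in for random forest):
    average the feature vectors of each class."""
    sums = defaultdict(lambda: [0.0] * len(X[0]))
    counts = defaultdict(int)
    for x, label in zip(X, y):
        counts[label] += 1
        for j, v in enumerate(x):
            sums[label][j] += v
    return {label: [s / counts[label] for s in sums[label]] for label in counts}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest (squared Euclidean)."""
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))


def macro_f1(y_true, y_pred):
    """Per-class F1 = 2TP / (2TP + FP + FN), averaged over classes."""
    scores = []
    for c in set(y_true) | set(y_pred):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def cross_validated_f1(X, y, k=10, seed=0):
    """Train on k-1 folds, score macro-F1 on the held-out fold, average over folds."""
    fold_scores = []
    for test_idx in k_fold_indices(len(X), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = fit_centroids([X[i] for i in train_idx], [y[i] for i in train_idx])
        preds = [predict(model, X[i]) for i in test_idx]
        fold_scores.append(macro_f1([y[i] for i in test_idx], preds))
    return sum(fold_scores) / len(fold_scores)


# Synthetic, hypothetical data: a steady wrist vs. a wrist moving while eating.
rng = random.Random(42)
calm = [rng.gauss(1.0, 0.05) for _ in range(2000)]
active = [rng.gauss(1.0, 0.6) for _ in range(2000)]

# Non-overlapping 50-sample windows, so no sample appears in two windows.
X_calm = window_features(calm, 50, 50)
X_active = window_features(active, 50, 50)
X = X_calm + X_active
y = ["no_secondary_task"] * len(X_calm) + ["eating"] * len(X_active)

score = cross_validated_f1(X, y, k=10)
print(f"mean macro-F1 over 10 folds: {score:.3f}")
```

Non-overlapping windows are used so that no raw sample is shared between a training window and a test window. With overlapping windows and fold assignment at the window level, adjacent windows can leak information across folds.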

Our results show the applicability of multimodal data retrieved through smart wearable devices for providing context in real-world driving scenarios, and they pave the way for better shared autonomy and for privacy-aware driving data collection, analysis, and feedback in future autonomous vehicles.

This work was presented at the IEEE Intelligent Vehicles 2021 Conference.

Related Publication

If you are interested in this research, please read our publication on this topic in IEEE Intelligent Vehicles (IV) 2021:

- Tavakoli, Arash, Shashwat Kumar, Mehdi Boukhechba, and Arsalan Heydarian. “Driver State and Behavior Detection Through Smart Wearables.” IEEE IV (2021).

Arsalan Heydarian

Principal Investigator

Arash Tavakoli

Graduate Research Assistant