This project aims to build multi-modal driver activity recognition models from naturalistic driving data. Such models can classify what the driver is doing at each moment, which in turn helps us analyze driving behavior during specific activities, both primary (e.g., changing lanes) and secondary (e.g., working with a phone).

Summary

To build such models, we rely on human annotators to create ground-truth labels of drivers' actions in real-life driving scenarios. A subset of the data collected from each participant is annotated for the following activities: changing lanes, turning, checking a mirror (a separate category for each of the three mirrors), working with a phone, talking on the phone, holding items, eating, drinking, dancing, and singing. For each of these categories, we provide multi-modal data: video of the driver, video of the road, the driver's hand acceleration and gyroscope data, and the driver's heart rate.
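One way to picture the annotated dataset is as a set of labelled time intervals, each of which cuts a matching window out of every sensor stream. The sketch below is hypothetical: the class and stream names (`Activity`, `Stream`, `AnnotatedSegment`, `extract_modalities`, `"heart_rate"`, `"hand_accel"`) are illustrative, the mirror names (left, right, rear-view) are assumed, and it assumes each modality is stored with its own sorted timestamps because the sensors likely sample at different rates.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass
from enum import Enum


class Activity(Enum):
    """The 12 annotated categories (mirror names are an assumption)."""
    CHANGING_LANE = "changing lane"
    TURNING = "turning"
    CHECKING_LEFT_MIRROR = "checking left mirror"
    CHECKING_RIGHT_MIRROR = "checking right mirror"
    CHECKING_REARVIEW_MIRROR = "checking rear-view mirror"
    WORKING_WITH_PHONE = "working with phone"
    TALKING_ON_PHONE = "talking on phone"
    HOLDING_ITEMS = "holding items"
    EATING = "eating"
    DRINKING = "drinking"
    DANCING = "dancing"
    SINGING = "singing"


@dataclass
class Stream:
    """One modality: sorted sample timestamps (seconds) and the matching values."""
    timestamps: list[float]
    values: list

    def slice(self, start: float, end: float) -> "Stream":
        """Keep only samples whose timestamp falls in [start, end]."""
        lo = bisect_left(self.timestamps, start)
        hi = bisect_right(self.timestamps, end)
        return Stream(self.timestamps[lo:hi], self.values[lo:hi])


@dataclass
class AnnotatedSegment:
    """A human-labelled interval [start, end] for one participant."""
    participant: str
    activity: Activity
    start: float
    end: float


def extract_modalities(segment: AnnotatedSegment,
                       streams: dict[str, Stream]) -> dict[str, Stream]:
    """Cut every modality (videos, IMU, heart rate, ...) to the annotated interval."""
    return {name: s.slice(segment.start, segment.end) for name, s in streams.items()}


# Usage with made-up toy values: each stream has its own sampling rate.
streams = {
    "heart_rate": Stream([0.0, 1.0, 2.0, 3.0], [72, 74, 75, 73]),
    "hand_accel": Stream([0.0, 0.5, 1.0, 1.5, 2.0], [(0.0, 0.0, 9.8)] * 5),
}
segment = AnnotatedSegment("P01", Activity.DRINKING, 0.9, 2.0)
window = extract_modalities(segment, streams)
print(window["heart_rate"].values)  # [74, 75]
```

Slicing by timestamp rather than by sample index keeps the modalities aligned to the same annotated interval even when their sampling rates differ.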

How do we model driver activity?

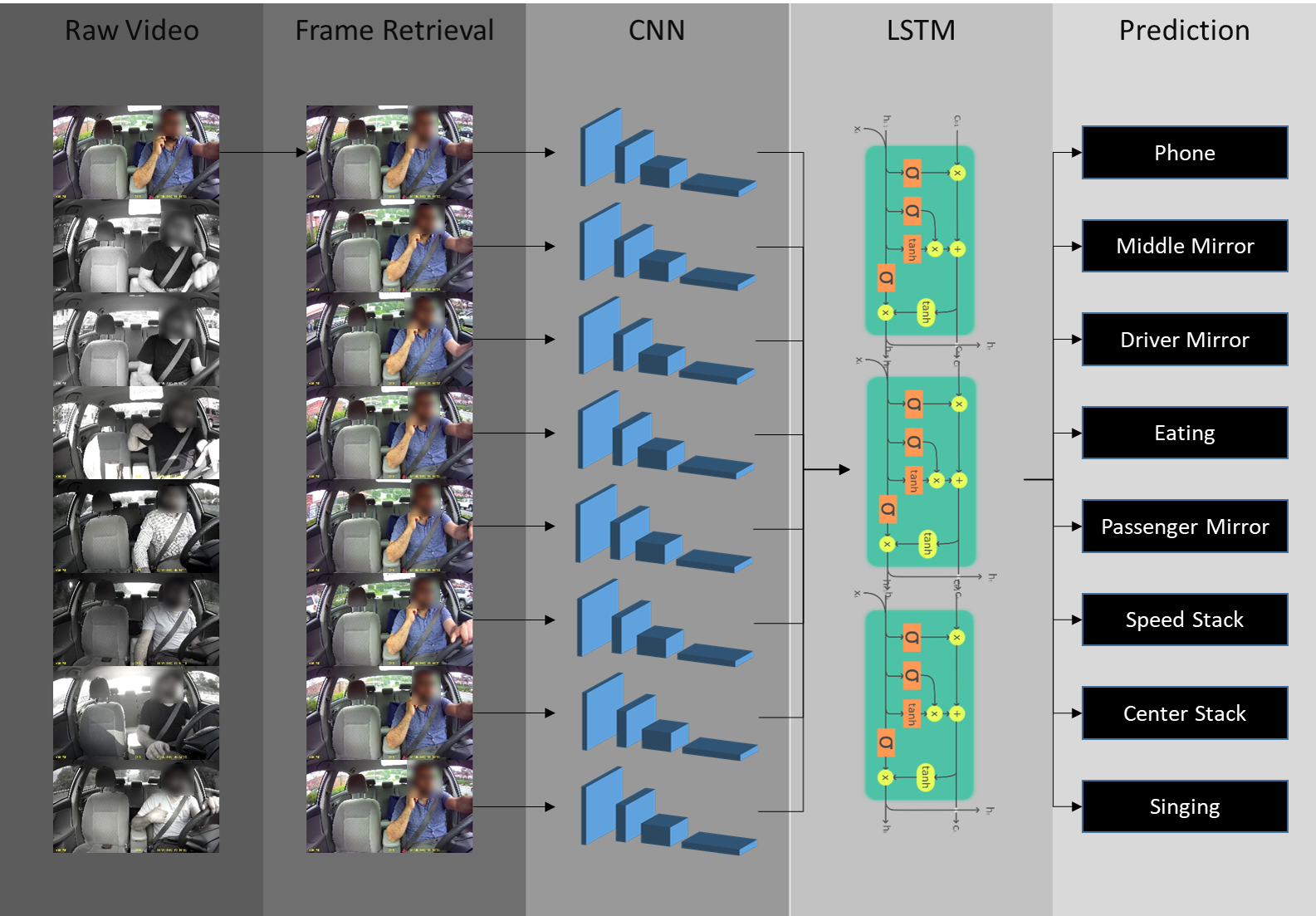

For video-based activity recognition, we combine Convolutional Neural Networks (CNNs) with Long Short-Term Memory (LSTM) networks. A CNN extracts features from each individual frame, and an LSTM then combines the features of consecutive frames to capture the temporal aspect of the activity.
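The CNN-then-LSTM pipeline can be illustrated with a small forward pass in NumPy. This is a toy sketch, not the project's model: it assumes grayscale frames, a single convolution layer with ReLU and global average pooling as the "CNN", a single-layer LSTM whose last hidden state summarizes the clip, and 12 output classes (one per annotated category, counting each mirror separately). All function names and sizes are hypothetical, and the weights are random and untrained, so the predicted class is meaningless.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_params(num_filters=8, kernel=3, hidden=16, num_classes=12, seed=0):
    """Random, untrained weights for a toy CNN + LSTM classifier (sizes are assumptions)."""
    rng = np.random.default_rng(seed)
    return {
        "kernels": rng.normal(0, 0.5, (num_filters, kernel, kernel)),
        "W": rng.normal(0, 0.1, (4 * hidden, num_filters)),  # frame features -> gates
        "U": rng.normal(0, 0.1, (4 * hidden, hidden)),       # previous hidden -> gates
        "b": np.zeros(4 * hidden),
        "W_out": rng.normal(0, 0.1, (num_classes, hidden)),
        "b_out": np.zeros(num_classes),
    }


def frame_features(frame, kernels):
    """CNN stage for one grayscale frame: conv -> ReLU -> global average pooling."""
    windows = np.lib.stride_tricks.sliding_window_view(frame, kernels.shape[1:])
    maps = np.einsum("ijab,kab->kij", windows, kernels)  # (K, H', W') feature maps
    return np.maximum(maps, 0.0).mean(axis=(1, 2))      # one K-dim vector per frame


def lstm_last_hidden(features, W, U, b):
    """Run a single-layer LSTM over a (T, D) sequence; return the final hidden state."""
    hidden = U.shape[1]
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    for x_t in features:
        z = W @ x_t + U @ h + b
        i, f, o, g = np.split(z, 4)  # input, forget, output gates and candidate
        i, f, o, g = sigmoid(i), sigmoid(f), sigmoid(o), np.tanh(g)
        c = f * c + i * g            # update the cell memory across frames
        h = o * np.tanh(c)           # gated memory becomes the hidden state
    return h


def classify_clip(frames, params):
    """Per-frame CNN features -> LSTM over time -> softmax over activity classes."""
    feats = np.stack([frame_features(f, params["kernels"]) for f in frames])  # (T, K)
    h = lstm_last_hidden(feats, params["W"], params["U"], params["b"])
    logits = params["W_out"] @ h + params["b_out"]
    exp = np.exp(logits - logits.max())  # numerically stable softmax
    return exp / exp.sum()


# Usage on 10 synthetic 32x32 frames.
params = init_params()
clip = np.random.default_rng(1).random((10, 32, 32))
probs = classify_clip(clip, params)
print(probs.shape)  # (12,)
```

Reading only the last hidden state yields one label per clip; reading every hidden state would instead give a label per frame. A practical system would more likely use a pretrained CNN and train the whole pipeline in a deep learning framework.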

Arsalan Heydarian

Principal Investigator

Arash Tavakoli

Graduate Research Assistant

Xiang Guo

Graduate Research Assistant